When you want to run the same geoprocessing tool on many feature classes, one option is to right-click the tool and select the batch option. However, adding all your datasets to the list can be tedious: the entry columns have to be widened to check that the paths have been entered correctly, and the parameters still need to be set for each row even if they are identical. Dragging and dropping, or copying and pasting, paths takes time. If there is an error in one row, you may not find out until the tool fails and has to be re-run.

Our goal: Write once, process many

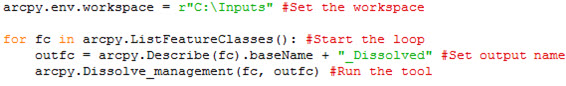

A few lines of Python can help. Here is an example of a Python code block that loops through all the files in the folder ‘C:\Inputs’, runs the ‘Dissolve (Data Management)’ tool on them, and then writes the output to ‘C:\Outputs’.

The rest of this blog reviews the simple script above and looks at variations of it that can assist your batch processing.

We’d suggest that you write your Python script in IDLE or an equivalent Python Integrated Development Environment (IDE), and then copy and paste it into the Python Command window in ArcCatalog to allow the geoprocessing results to be recorded within your Arc session.

Step 1: Setting the workspace

The first line of our script defines the environment workspace parameter, which points at the folder or geodatabase that contains the input datasets.
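As a minimal sketch of this step, setting the workspace could look like the helper below. The function name `set_workspace` is ours, not part of arcpy, and `arcpy` is imported inside the function only so that the snippet can be defined on a machine without ArcGIS.

```python
def set_workspace(folder):
    """Point the geoprocessing environment at the folder or geodatabase to process."""
    import arcpy  # only available where ArcGIS is installed

    # Every arcpy list function and tool call that follows uses this location.
    arcpy.env.workspace = folder
    return arcpy.env.workspace
```

In the Python window you would more likely just assign the path to `arcpy.env.workspace` directly on one line.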

The ‘r’ prefix makes Python treat the string as a ‘raw’ string, meaning that it doesn’t scan the string for what are called ‘backslash escape sequences’. In this case, that means you don’t have to rewrite the folder path with double backslashes in place of single ones.
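The effect of the prefix can be checked in plain Python, with no ArcGIS needed. This small sketch uses the example folder from this post plus a made-up ‘C:\temp’ path to show what goes wrong without the prefix.

```python
# The same folder written two ways: both hold exactly the same characters.
raw_path = r"C:\Inputs"       # raw string: backslashes are kept literally
escaped_path = "C:\\Inputs"   # normal string: each backslash is doubled
same = raw_path == escaped_path
print(same)

# Without the 'r' prefix, "\t" is read as a single tab character,
# so the path silently loses a backslash and the letter 't'.
raw_temp = r"C:\temp"     # 7 characters
plain_temp = "C:\temp"    # 6 characters: 'C', ':', tab, 'e', 'm', 'p'
print(len(raw_temp))
print(len(plain_temp))
```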

Step 2: Looping through the input datasets

Next we use a ‘for’ loop to retrieve the datasets from the workspace:

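For feature classes or shapefiles, the loop runs over the list returned by arcpy.ListFeatureClasses(), holding each name in turn in the variable ‘fc’.

For rasters, it runs over the list returned by arcpy.ListRasters(), holding each name in turn in the variable ‘raster’.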

Note that ‘fc’ and ‘raster’ are arbitrary names – the script would work if all instances of fc were replaced with fc2.
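A rough sketch of the two loops is shown below. The wrapper function `list_inputs` is our own invention for illustration; the arcpy calls inside it are `arcpy.ListFeatureClasses()` and `arcpy.ListRasters()`, both of which read from the current workspace.

```python
def list_inputs(workspace):
    """Return (feature_classes, rasters) found in the given workspace."""
    import arcpy  # only available where ArcGIS is installed

    arcpy.env.workspace = workspace

    feature_classes = []
    for fc in arcpy.ListFeatureClasses():   # feature classes or shapefiles
        feature_classes.append(fc)

    rasters = []
    for raster in arcpy.ListRasters():      # raster datasets
        rasters.append(raster)

    return feature_classes, rasters
```

In a real script the body of each loop would call a geoprocessing tool rather than just collecting the names.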

Asides

1. Filtering the input datasets

If you only want to process datasets that contain a certain set of characters within the file name, you can pass a wildcard pattern to the list function, where each asterisk (*) stands for any run of characters. For example, to list all the datasets that have “Name” in the middle of the dataset name, you can use the pattern ‘*Name*’:
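As a sketch of the filter, the hypothetical helper below (`list_matching` is our name, not arcpy's) builds the pattern and passes it as the first argument of `arcpy.ListFeatureClasses`, which is its wildcard parameter.

```python
def list_matching(workspace, text):
    """List feature classes whose names contain `text` anywhere in the name."""
    import arcpy  # only available where ArcGIS is installed

    arcpy.env.workspace = workspace
    pattern = "*" + text + "*"   # e.g. "*Name*"
    return arcpy.ListFeatureClasses(pattern)
```

Dropping the leading asterisk would restrict the match to names that start with the text, and dropping the trailing one to names that end with it.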

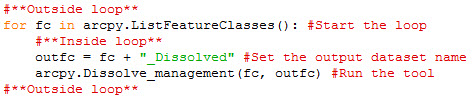

2. Setting indents for loops

Python uses indentation (leading whitespace) to mark which lines belong to a loop. Once a loop has been defined, each line of its body has to be entered on an indented line, as shown in the screengrab below. To resume the script outside the loop, enter the next line at the same level of indentation as the ‘for’ statement.
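The indentation rule can be tried out in plain Python. In this sketch the dataset names are made up for illustration; the indented lines run once per dataset, and the unindented line after them runs once when the loop has finished.

```python
datasets = ["roads.shp", "rivers.shp", "wells.shp"]  # made-up names
messages = []

for fc in datasets:
    # Indented: these lines belong to the loop and run once per dataset.
    message = "Processing " + fc
    messages.append(message)

# Same indentation as 'for': this line is outside the loop and runs once.
summary = str(len(messages)) + " datasets processed"
print(summary)
```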

Step 3: Defining the output dataset names

The easiest way to define a meaningful dataset output name is to use the input name, which is held in the ‘fc’ variable in our examples. Unfortunately, when the input is a shapefile, the variable ‘fc’ will include the ‘.shp’ suffix. Happily, we can use the arcpy.Describe function, whose baseName property holds the suffix-less name for the input dataset, and then use this to create our new output name.

The Describe function works for all the types of data that can be used in ArcMap, so this line of our script can cope with (almost) anything we fire at it. As we’re writing the output to the same folder as we’re using for the input, we’ll need to make sure that the output name is different to the input one – in our script, ‘_Dissolved’ has been added as a suffix.
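A minimal sketch of the naming step might look like this. The helper name `dissolved_name` is ours; `baseName` is the Describe property that holds the dataset name without its file extension.

```python
def dissolved_name(fc):
    """Build an output name such as 'roads_Dissolved' from an input such as 'roads.shp'."""
    import arcpy  # only available where ArcGIS is installed

    desc = arcpy.Describe(fc)
    # baseName drops the '.shp' suffix; the added text keeps the
    # output name different from the input name.
    return desc.baseName + "_Dissolved"
```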

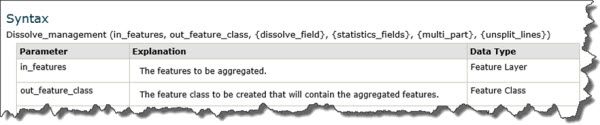

Last step: Running the geoprocessing tool

In our example, we’re running the Dissolve tool on each input dataset. If we consult the help for the tool, we see that it requires an ‘in_features’ parameter, which corresponds to our ‘fc’ variable, and an ‘out_feature_class’ parameter, which is the ‘outfc’ variable we have just defined. The other parameters are optional, and aren’t used in our script.
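Putting the steps together, the whole script could be sketched roughly as follows. This is our reconstruction from the steps described in this post rather than the original code, wrapped in a function (`dissolve_all`, a name we made up) so that it can be reused; the variable names ‘fc’ and ‘outfc’ follow the text.

```python
def dissolve_all(workspace):
    """Dissolve every feature class in `workspace`; return the output names."""
    import arcpy  # only available where ArcGIS is installed

    arcpy.env.workspace = workspace          # Step 1: set the workspace
    outputs = []
    for fc in arcpy.ListFeatureClasses():    # Step 2: loop through the inputs
        # Step 3: suffix-less input name plus '_Dissolved'
        outfc = arcpy.Describe(fc).baseName + "_Dissolved"
        # Last step: in_features and out_feature_class are the only
        # required parameters, so the optional ones are left out.
        arcpy.Dissolve_management(fc, outfc)
        outputs.append(outfc)
    return outputs
```

Because the outputs land in the same workspace as the inputs, running the function a second time would also pick up the ‘_Dissolved’ datasets from the first run, so a wildcard filter or a separate output folder may be worth considering.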

And that’s that: we’ve explained our script. Below are a few more ideas that allow you to tailor the script to your requirements.

Going further

1. Defining another location for the output

If we want to write the output to a different folder, we can set the variable for that folder outside the loop. Note that we need to add ‘\\’ (double backslash) to the end of the folder path so that we can join the name of the file to the end of the folder. If we don’t, no backslash will be added at all, and the folder name and file name will run together into a single, wrong path.

Note that because the output is being written to another folder, we can simply use the input dataset name for the output.
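The sketch below shows the double-backslash approach described above, using the ‘C:\Outputs’ folder from the start of this post. It also shows `os.path.join` from the standard library as an alternative of our own choosing, which adds the separator for us. The function and variable names are ours. Note that a raw string cannot end in a single backslash, so the doubled form is a convenient way to write the trailing separator.

```python
import os

# Set once, outside the loop. The trailing "\\" is one literal backslash.
out_folder = "C:\\Outputs\\"

def output_by_concatenation(fc):
    """Join folder and file name by simple string addition."""
    return out_folder + fc

def output_by_join(folder, fc):
    """Alternative: let os.path.join insert the separator."""
    return os.path.join(folder, fc)

def dissolve_to_folder(workspace, folder):
    """Dissolve each input, writing outputs of the same name into `folder`."""
    import arcpy  # only available where ArcGIS is installed

    arcpy.env.workspace = workspace
    for fc in arcpy.ListFeatureClasses():
        arcpy.Dissolve_management(fc, output_by_join(folder, fc))
```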

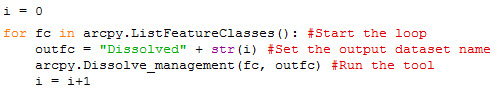

2. Using counters to name the output dataset

If we want to use a counter to name our output datasets (e.g. to create datasets with names like File1, File2, File3), then we need to define a new variable, before the ‘for’ loop, to hold the count. We can then append this to our output dataset name by converting it from a number to a string using str(inputNumber), as shown below (if you don’t do the conversion, you’ll get an error). The last code line shown below increments the counter by 1, so that the next iteration of the loop creates the next dataset name in the sequence.
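The counter logic can be sketched in plain Python as follows. The variable name inputNumber comes from the text above; the function `numbered_names` and the sample dataset names are made up for illustration. In a real script, the line that records each pair would be replaced by the geoprocessing call.

```python
def numbered_names(datasets, prefix="File"):
    """Pair each input with an output name: File1, File2, File3, ..."""
    inputNumber = 1                  # defined before the 'for' loop
    pairs = []
    for fc in datasets:
        # str() is needed here: prefix + inputNumber would raise a TypeError
        # because Python will not add a number to a string.
        outfc = prefix + str(inputNumber)
        pairs.append((fc, outfc))
        inputNumber += 1             # next iteration gets the next number
    return pairs

pairs = numbered_names(["roads.shp", "rivers.shp", "wells.shp"])
print(pairs)
```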

Conclusion

Using Python code from ArcCatalog can speed up batch processing for many ESRI tools, give us a degree of flexibility over output dataset names, and maintain a record of what we’ve done in the geoprocessing history.

If you’re interested in Python, why not check out our previous blog post on Creating Layer Files with Python?

Posted by Tanya Knowles, GIS Technician, Exprodat.