The cultural data that E&P organisations rely on for exploration and commercial development is ever-changing by nature. The status of leases changes, as do company equities and stakes in existing and new blocks, the number of wells and production information.

Typically, data suppliers make cultural data available as regular updates, often in an unstructured format, including shapefiles or file geodatabases, tables and .csv files, which need to be incorporated into the organisation’s corporate spatial data repositories. Datasets also need to comply with internal standards, not only in terms of data format, but also in terms of naming conventions (for both feature classes and attributes), coordinate reference system and language.

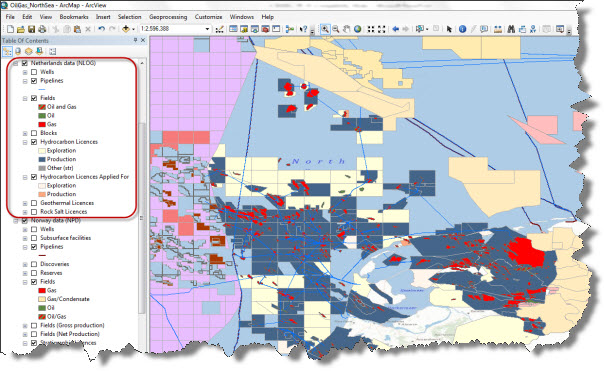

Figure 1 – Cultural data available in the public domain for the North Sea region visualised in ArcGIS Desktop

Tasks of this kind are repetitive and labour-intensive, and need to be run on a regular, often monthly, basis. This can become a real struggle, especially for small E&P organisations where the database management team may be severely constrained in terms of resources. In such cases, keeping track of where the data comes from and which data is current or obsolete, while ensuring consistency and overall data quality, can become challenging.

At Exprodat we often help our clients to stay on top of all this. We typically take advantage of the powerful capabilities of Python scripting and Esri’s ModelBuilder to help automate such monthly updates.

Let’s take the example of an operator whose main interest is the North Sea region. Operators active in this geographic area can access multiple sources of cultural data, both in the public domain (provided by central government departments, often free of charge) and from commercial vendors (see Figure 2).

Figure 2 – Example data providers for cultural data in the North Sea region (commercial vendors have been omitted).

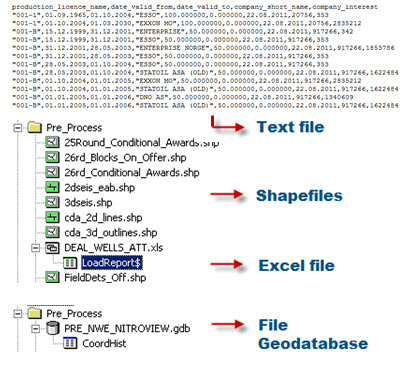

If you add to the number of sources the fact that each of them provides data in different formats, languages and coordinate reference systems (see Figure 3) it’s easy to understand how things can get a bit messy.

Figure 3 – Text files, Excel files, shapefiles and file geodatabases are all data formats used to deliver cultural data to users.

The cultural data geoprocessing infrastructure discussed here aims at ensuring the following:

- Consistency in feature class (and layer file) names, independent of the provider

- Consistency in attribute field names and aliases

- Attributes are all in English

- A common coordinate reference system is used for all feature classes

- Metadata is kept up-to-date.

Data geoprocessing

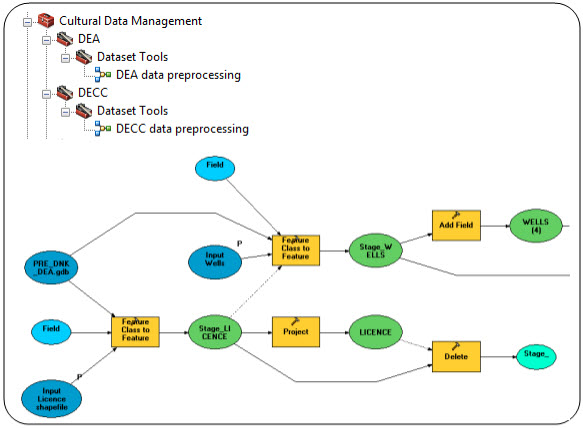

The set of tools used to build the automated data update infrastructure we discuss here uses Esri’s ModelBuilder to pre-process the datasets as they are downloaded from the providers’ websites. Python scripting is then used to handle the heavier geoprocessing tasks such as translating attributes into English, renaming fields, setting aliases and re-projecting to the corporate coordinate reference system.

The datasets made available by some data providers do not have consistent names, and those names may change without notice, potentially on a month-by-month basis(1). ModelBuilder (Figure 4) has the flexibility to take these variations into account. By exposing the dataset name as a parameter within the models, I can make sure that datasets whose names have changed are processed without the person looking after the data updates needing to:

- Remember the specific bit of code that takes care of the update for that dataset,

- Remember where this code is stored, and

- Know which bit of the script needs to be changed.

This is particularly convenient when the required geoprocessing tools are native in ArcGIS, and already exposed as toolboxes.
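The same idea can be carried into the scripted part of the workflow. The following is a minimal sketch, not the approach used in the models themselves: it assumes that a provider appends an 8-digit or 6-digit date stamp to the dataset name (for example `Fields_20130401`), which is a hypothetical convention chosen for illustration, and it resolves the delivered name back to a stable base name.

```python
import re

# Assumed naming convention (hypothetical): "<base>_<YYYYMMDD>" or "<base>_<YYYYMM>".
_DATED_NAME = re.compile(r"^(?P<base>.+?)_(?P<stamp>\d{8}|\d{6})$")


def split_delivery(name):
    """Split a delivered dataset name into (base name, date stamp).

    Names without a recognised date stamp are returned unchanged with an
    empty stamp.
    """
    match = _DATED_NAME.match(name)
    if match:
        return match.group("base"), match.group("stamp")
    return name, ""


def latest_delivery(names, base):
    """Return the delivered name with the most recent stamp for `base`, or None."""
    stamped = []
    for name in names:
        found_base, stamp = split_delivery(name)
        if found_base == base:
            stamped.append((stamp, name))
    # Stamps are zero-padded digits, so string comparison follows date order.
    return max(stamped)[1] if stamped else None
```

With a helper like this, the person running the update only needs to know the base name ("Fields"), whatever date the provider has attached to this month's delivery.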

Figure 4 – Example of the pre-processing Cultural Data Management toolbox and of one of the models

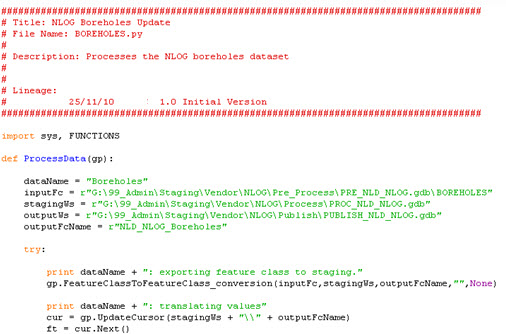

After the datasets have been pre-processed, Python scripting takes care of the most laborious tasks using advanced geoprocessing before the data is published to the corporate data repositories. Feature modification, attribute translation and creation of data archives are all executed from within Python (Figure 5).

Figure 5 – Python scripting for advanced geoprocessing
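To make the renaming and translation steps more concrete, here is a minimal sketch using only the Python standard library. It is not the production script: the field names and the Norwegian-to-English value pairs in the lookup tables are hypothetical examples, and a real workflow would apply the same mappings to feature class attributes through ArcGIS geoprocessing rather than to plain dictionaries.

```python
import csv
import io

# Hypothetical lookup tables; in practice there would be one set per provider.
FIELD_MAP = {"FELTNAVN": "FIELD_NAME", "STATUS": "STATUS"}
VALUE_MAP = {"STATUS": {"Produserer": "Producing", "Nedstengt": "Shut down"}}


def standardise_rows(rows, field_map, value_map):
    """Yield rows with corporate field names and English attribute values."""
    for row in rows:
        clean = {}
        for source_field, value in row.items():
            target = field_map.get(source_field)
            if target is None:
                continue  # field is not part of the corporate standard
            # Translate known values; leave anything unrecognised untouched.
            translations = value_map.get(target, {})
            clean[target] = translations.get(value, value)
        yield clean


if __name__ == "__main__":
    # Illustrative input only; the extra column is dropped by the mapping.
    sample = io.StringIO("FELTNAVN,STATUS,INTERNAL_ID\nEkofisk,Produserer,17\n")
    for row in standardise_rows(csv.DictReader(sample), FIELD_MAP, VALUE_MAP):
        print(row)
```

Keeping the mappings in data rather than in code means a new provider, or a new attribute, can be supported by extending the tables without touching the processing logic.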

Using Python scripts allows you to schedule the processes to run in batch mode outside office hours, e.g. overnight or over the weekend. Windows Task Scheduler can be used to set the order and time at which the scripts run unsupervised. Each script generates a log file when it runs, recording the successful completion of its tasks or any problems encountered.
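A logged, unattended run of this kind could look roughly like the sketch below. It is an illustration under stated assumptions rather than the scripts described here: the step names and the logger name are hypothetical, and each step is simply any Python callable.

```python
import logging


def run_batch(steps, log_path):
    """Run (name, callable) steps in order and log each outcome to log_path.

    Returns the list of step names that failed.
    """
    logger = logging.getLogger("cultural_data_update")  # hypothetical name
    logger.setLevel(logging.INFO)
    handler = logging.FileHandler(log_path, mode="a", encoding="utf-8")
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    failed = []
    try:
        for name, step in steps:
            try:
                step()
                logger.info("Completed: %s", name)
            except Exception:
                # Record the traceback, then carry on with the remaining steps.
                logger.exception("Failed: %s", name)
                failed.append(name)
    finally:
        # Detach and close the handler so the log file is released.
        logger.removeHandler(handler)
        handler.close()
    return failed
```

Catching the exception per step means one failing dataset does not stop the rest of the overnight run, and the log shows exactly which step needs attention the next morning.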

A simple tool to ensure that metadata is also kept up-to-date completes the suite of tools. This tool parses the metadata files stored for each feature class/layer file at a given location in order to update the metadata, e.g. the dataset’s “last update” date.
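As a rough illustration of what such a metadata tool does, the sketch below updates a date element in an XML metadata file using the standard library. The element name `lastUpdate` and its position directly under the root are assumptions made for the example; real ArcGIS metadata follows its own schema, so the tag and path would need to be adapted.

```python
import datetime
import xml.etree.ElementTree as ET


def stamp_last_update(xml_path, when=None, tag="lastUpdate"):
    """Set, or create, a date element directly under the metadata root.

    `tag` is a hypothetical element name. Returns the ISO date written.
    """
    when = when or datetime.date.today()
    tree = ET.parse(xml_path)
    root = tree.getroot()
    element = root.find(tag)
    if element is None:
        # The file has never been stamped, so add the element.
        element = ET.SubElement(root, tag)
    element.text = when.isoformat()
    tree.write(xml_path, encoding="utf-8", xml_declaration=True)
    return element.text
```

Running a function like this over every metadata file in a folder, as the last step of the update, keeps the recorded date in step with the data.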

Results

We have had very positive feedback from the clients where we have deployed the solution discussed here as it is relatively easy to implement and maintain. The solution also allows flexibility in the sense that it can accommodate new datasets that providers may make available down the line, even after the whole framework is implemented. It also requires close to no maintenance and, an element that may be significant for smaller E&P organisations, does not require any additional third-party applications or ArcGIS plug-ins.

To give you an idea of how long it takes to run the monthly data update from the sources indicated in Figure 2 (public and commercial), here are some rough numbers:

- Acquisition/download from data sources: 2 to 4 hours (depending on the actual number of dataset updates available from each data source)

- Model-based data pre-processing: ½ to 1 hour

- Python geoprocessing: 3 hours (run unattended overnight or at the weekend, so no staff effort is required).

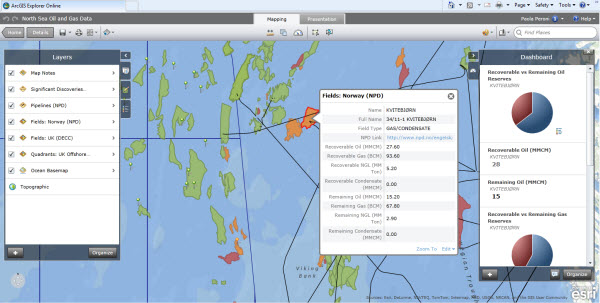

Up-to-date cultural data can then be used by GIS users with confidence and consumed in any GIS client, e.g. ArcGIS Desktop (Figure 1) or ArcGIS Explorer Online (Figure 6).

Figure 6 – Cultural data available in the public domain for the North Sea region visualised in ArcGIS Explorer Online(2)

Please contact Exprodat using the link at the bottom of this post if you are interested in finding out more about the solution presented here.

Posted by Paola Peroni, Senior GIS Consultant.

Notes:

(1) Some providers “attach” the date of the last data update (essentially the cut-off date for the vendor’s database) to the name of the feature class. So, for instance, a field dataset updated at “Date A” would bear the name “Fields_DateA” (not the real name), while the update made available at a later date would be provided as, for instance, “Fields_DateB”.

(2) ArcGIS Explorer Online is Esri’s free online mapping tool, which can be used to explore, visualise and share spatial information online. The North Sea Oil and Gas Data map shown in Figure 6 has been compiled by Exprodat using freely available E&P cultural data.